Research

The aim of my research is to use advanced Computer Vision, Sensory Fusion and Machine Learning algorithms for Digital Health. I’m currently collaborating with the SPHERE Project, which aims at using common sensors like cameras and accelerometers deployed in people’s homes to monitor the occupants’ general health. My aim is to analyse the data and come up with creative algorithms to understand the occupants’ health while protecting their privacy.

Some of the most recent projects I worked on include:

- Inertial Hallucinations

- Sit-to-Stand analysis

- Matching video data with wearables

- Calorie estimation from video

Previous work during my PhD can be found here.

Inertial Hallucinations

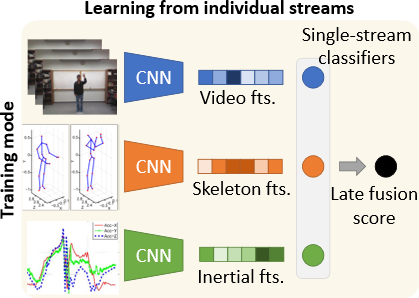

The aim of this research project was to find a better way to exploit multi-sensory data in the context of Ambient Assisted Living (AAL). Imagine an elderly person living in a house monitored with AAL sensors, like cameras and wearable accelerometers. Our research shows that by using both cameras and accelerometers in a deep learning sensory fusion algorithm, we can detect Activities of Daily Living (ADL) with an accuracy of 97%. This, however, requires the subject to wear an accelerometer while within the cameras’ field of view, which is normally quite limited and only covers a few rooms (not the bathroom or bedroom). As soon as the participant leaves the field of view of the cameras, the best accuracy in detecting ADL using accelerations only drops from 97% to 83%. What can we do to improve these scores while still using sensory fusion?

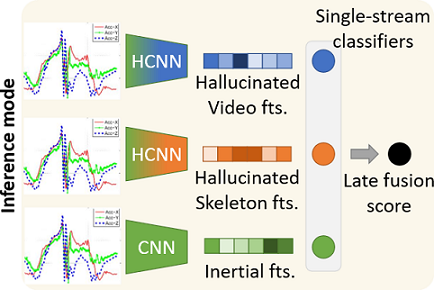

We experimented with the frameworks of Learning Using Privileged Information (LUPI) and Modality Hallucination. In brief, we created a deep learning model that takes accelerometer data as input and produces (hallucinated) video features as output. Whenever the subject is in front of the camera, we use a standard multi-sensory fusion approach.

As soon as they leave the camera view, we replace the missing modality with the hallucinated video features. This novel approach allowed us to boost the classification accuracy of ADL, using inertial data only at inference time, from 83% up to 90%, setting a new state of the art on two different datasets.
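The switch between real and hallucinated video features can be sketched as follows. This is only a minimal illustration under stated assumptions, not the model from the paper: `fuse_with_fallback` and `toy_hallucinator` are hypothetical names, the toy hallucinator stands in for a trained network, and fusing by concatenation is an assumption made to keep the example short.

```python
from typing import Callable, List, Optional, Sequence


def fuse_with_fallback(
    accel_feat: Sequence[float],
    video_feat: Optional[Sequence[float]],
    hallucinate_video: Callable[[Sequence[float]], Sequence[float]],
) -> List[float]:
    """Concatenate inertial features with real or hallucinated video features."""
    if video_feat is None:
        # Subject is out of camera view: fill in the missing modality.
        video_feat = hallucinate_video(accel_feat)
    # Fusion by concatenation is an assumption made for this sketch.
    return list(accel_feat) + list(video_feat)


def toy_hallucinator(accel_feat: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for the trained hallucination network."""
    return [2.0 * x for x in accel_feat]


# In camera view: real video features are used.
in_view = fuse_with_fallback([0.1, 0.2], [0.5, 0.6], toy_hallucinator)
# Out of camera view: video features are hallucinated from accelerations.
out_of_view = fuse_with_fallback([0.1, 0.2], None, toy_hallucinator)
```

In both cases the downstream classifier receives a vector of the same size, which is what lets the same fusion pipeline run with inertial data only.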

To read more about this project, please check out this publication:

Inertial Hallucinations - When Wearable Inertial Devices Start Seeing Things

Masullo A., Perrett T., Burghardt T., Damen D. & Mirmehdi M.

June 2022, Pending Review.

Sit-to-Stand analysis

One of the ideas we pursued for the HEmiSPHERE project is to focus on people with mobility issues (e.g. hip or knee surgery patients) and to analyse their peculiar way of standing up. The idea behind this project is that people stand up several times during the day and their speed of standing up is correlated with their physical health. We therefore installed Kinect cameras in the subjects’ own living rooms and pointed them at sofas or armchairs. The cameras only record silhouettes to protect the privacy of the patients.

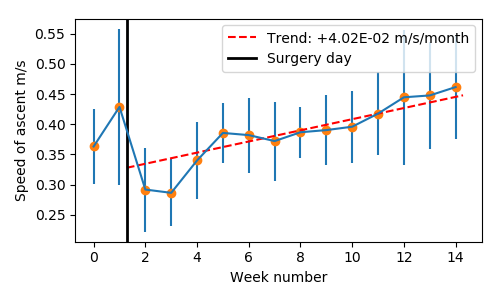

We then wrote an algorithm that uses a Convolutional Neural Network to automatically identify stand-up video clips and we analysed the changes in body height (i.e. bounding box height) to measure the speed of ascent. This speed of ascent can be measured for several weeks before and after the surgery and can be plotted to show interesting trends.

If we plot the speed of ascent before and after the surgery, we can see that the person stands up much more slowly soon after the surgery (vertical black line). This is probably due to the impairment caused by pain after the surgery. However, from then on we can see a slow but steady increasing trend in the stand-up speed, which results in a final speed that is higher than before the surgery, confirming that the operation was successful.
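As a rough illustration of how a speed of ascent could be derived from bounding-box heights, here is a minimal sketch. It assumes the speed is taken as the peak rate of height increase between consecutive frames of one stand-up clip; the function name `ascent_speed`, that definition and the sample values are assumptions for illustration, not necessarily the measure used in the publication.

```python
from typing import List, Sequence


def ascent_speed(times_s: Sequence[float], heights_px: Sequence[float]) -> float:
    """Peak rate of increase of the bounding-box height over one clip (px/s)."""
    if len(times_s) != len(heights_px) or len(times_s) < 2:
        raise ValueError("need at least two matching samples")
    rates: List[float] = []
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        # Finite-difference estimate of the vertical growth of the box.
        rates.append((heights_px[i] - heights_px[i - 1]) / dt)
    return max(rates)


# Made-up clip: the silhouette grows from 90 px (sitting) to 170 px (standing).
times = [0.0, 0.5, 1.0, 1.5, 2.0]
heights = [90.0, 90.0, 130.0, 170.0, 170.0]
speed = ascent_speed(times, heights)
```

Computing one such value per detected stand-up clip gives a time series that can be plotted over weeks.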

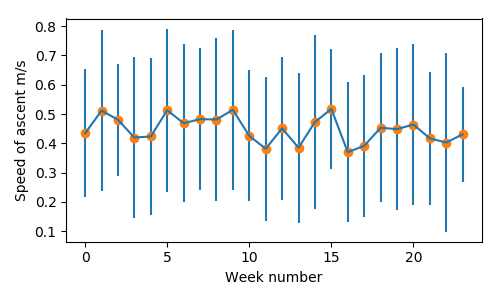

For comparison, if we look at a healthy participant for a period of 5 months we can see that no particular trend is present in their way of standing up. To read more about this project, please check out this publication:

Sit-to-Stand Analysis in the Wild Using Silhouettes for Longitudinal Health Monitoring

Masullo A., Burghardt T., Perrett T., Damen D. & Mirmehdi M.

August 2019, Lecture Notes in Computer Science (ICIAR).

Matching video data with wearables

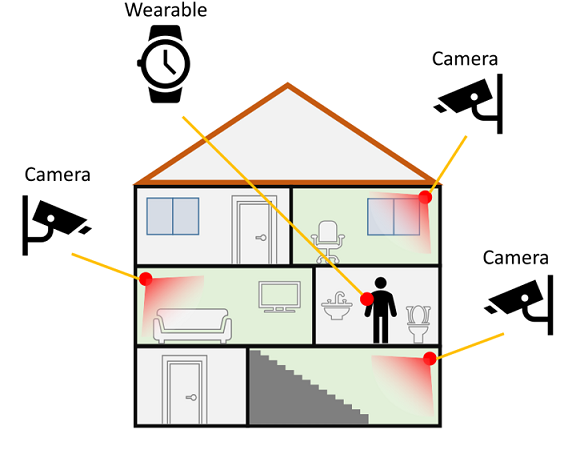

One of the main objectives of the SPHERE Project is to achieve health monitoring through the use of non-medical devices. In order to achieve this, we need to fuse information from multiple sensors so that they can cooperate and increase reliability. The aim of this project is to enable the fusion of video (silhouettes) and wearable (accelerometers) devices so that they can be used jointly.

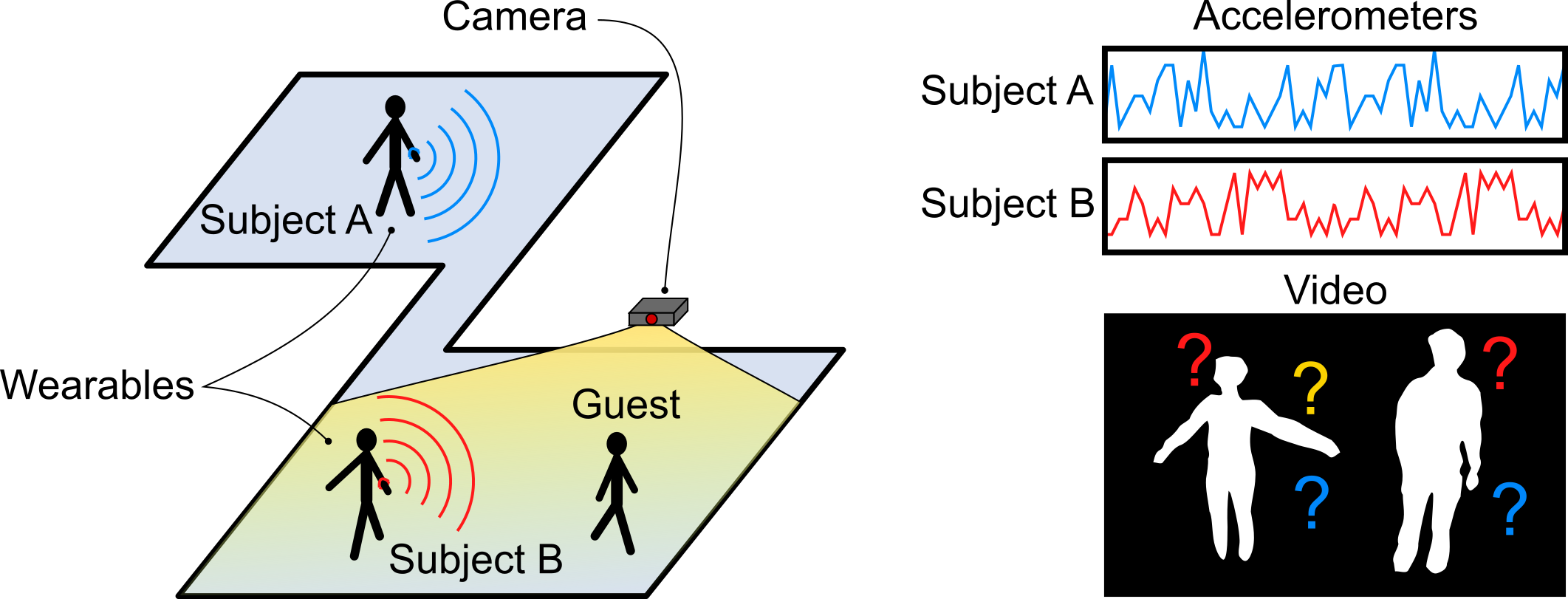

The main problem is illustrated in the following figure:

Let’s consider three people in a house: two are wearing a wearable and one is a guest, and only two of them are in front of the camera. From the video cameras, we can only produce silhouettes for privacy reasons and people cannot be easily recognised from them. How do we know who appears in the image? The accelerometer signals that we read from the wearables are directly associated with the subjects, but the video data is not, as anyone can appear in front of the camera.

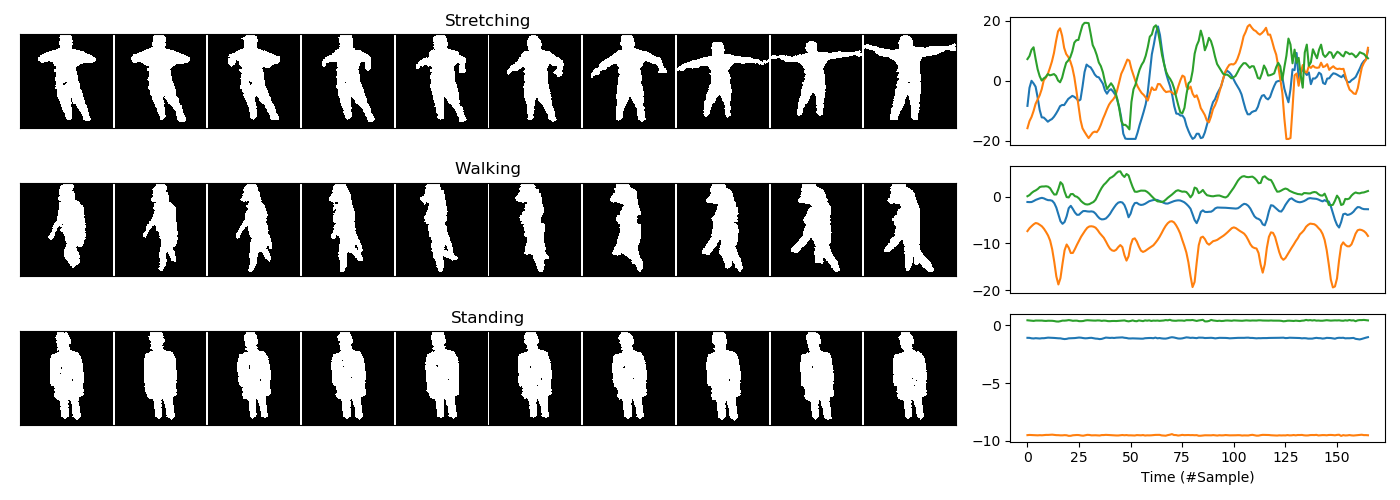

The way we tackle this problem is by looking at the motion. If the silhouette appears to be walking, we can expect a certain pattern in the accelerometer signal, whereas if the person is still we expect no motion.

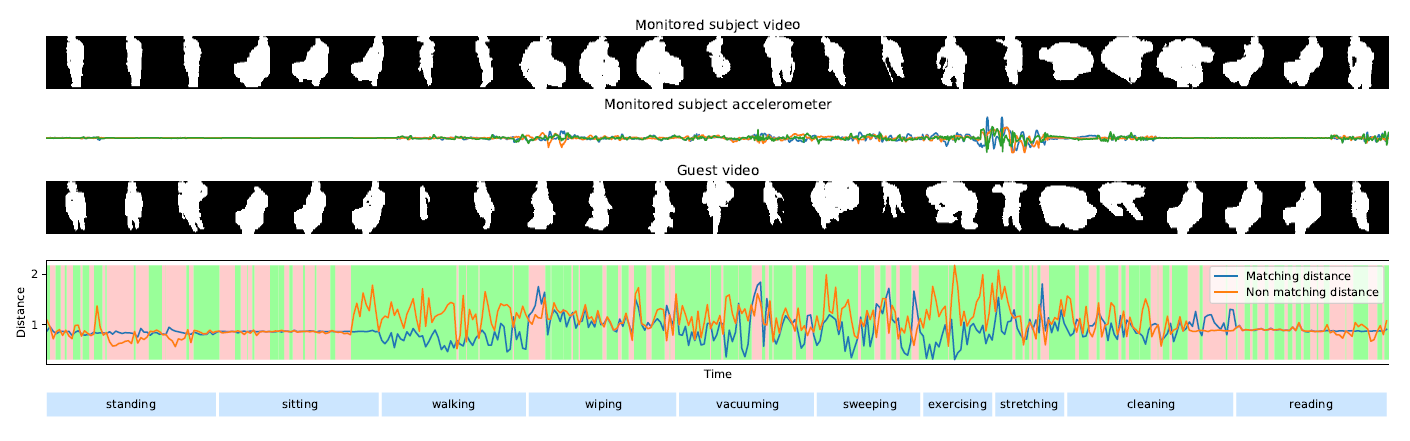

Based on this idea we developed a Deep Neural Network that transforms both video and accelerometer streams into feature vectors that can be easily compared. If the video and accelerometer feature vectors are similar, we treat them as a match.
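The comparison step can be sketched as below. This is a hedged illustration only: it assumes the feature vectors have already been produced by the two network branches, and the use of cosine similarity, the `threshold` value, the function names and the wearable labels are all assumptions made for this example rather than details taken from the publication.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def match_silhouette(
    video_vec: Sequence[float],
    wearable_vecs: Dict[str, Sequence[float]],
    threshold: float = 0.8,
) -> Optional[str]:
    """Return the most similar wearable, or None (e.g. a guest without one)."""
    best_id, best_sim = None, threshold
    for wearable_id, vec in wearable_vecs.items():
        sim = cosine_similarity(video_vec, vec)
        if sim >= best_sim:
            best_id, best_sim = wearable_id, sim
    return best_id


# Made-up embeddings for two wearables and two silhouettes.
wearables = {"wearable_A": [1.0, 0.0, 0.2], "wearable_B": [0.0, 1.0, 0.1]}
resident = match_silhouette([0.9, 0.1, 0.2], wearables)
guest = match_silhouette([0.0, 0.0, 1.0], wearables)
```

Returning `None` when no similarity reaches the threshold is one simple way to handle a silhouette that belongs to someone not wearing a device.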

Results show that this algorithm works really well when the subjects in front of the camera are moving but it doesn’t perform well if they’re all still.

The solution to this problem is simply to track every person’s movement in front of the camera, so that the matching is resolved during movement and carried over segments with no motion. To read more about this project, please check out this publication:

Person Re-ID by Fusion of Video Silhouettes and Wearable Signals for Home Monitoring Applications

Masullo A., Burghardt T., Damen D., Perrett T. & Mirmehdi M.

April 2020, MDPI Sensors
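The tracking idea described above, where a match found during movement is carried across still segments, can be illustrated with a very small sketch. It assumes each tracked silhouette already has a per-frame matching result (`None` when the person is still and no match can be made); the function name `propagate_identity` and the simple forward fill are assumptions for illustration, not the published method.

```python
from typing import List, Optional, Sequence


def propagate_identity(per_frame_ids: Sequence[Optional[str]]) -> List[Optional[str]]:
    """Carry the last resolved identity of a track across unmatched frames."""
    resolved: List[Optional[str]] = []
    last: Optional[str] = None
    for frame_id in per_frame_ids:
        if frame_id is not None:
            # A match was found while the person was moving.
            last = frame_id
        # While the person is still, reuse the most recent match (if any).
        resolved.append(last)
    return resolved


# One track: matched while walking, unmatched while sitting still.
track = [None, "wearable_A", None, None, "wearable_A", None]
filled = propagate_identity(track)
```

Frames before the first successful match stay unresolved in this sketch.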

Calorie estimation from video

The number of calories burnt during the day is an important indicator of activity level and can be seen as a proxy measure of general health. Smartwatches and fitness trackers do a great job of measuring calories burnt while doing sport, but they struggle with standard indoor activities like cleaning, vacuuming or tidying up. For this project, the idea was to create an algorithm that uses a camera to understand movements and activities in an indoor environment and automatically estimates the calories burnt.

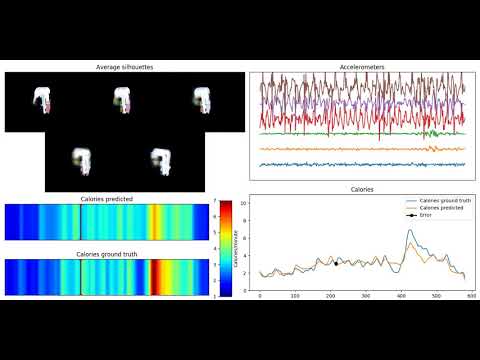

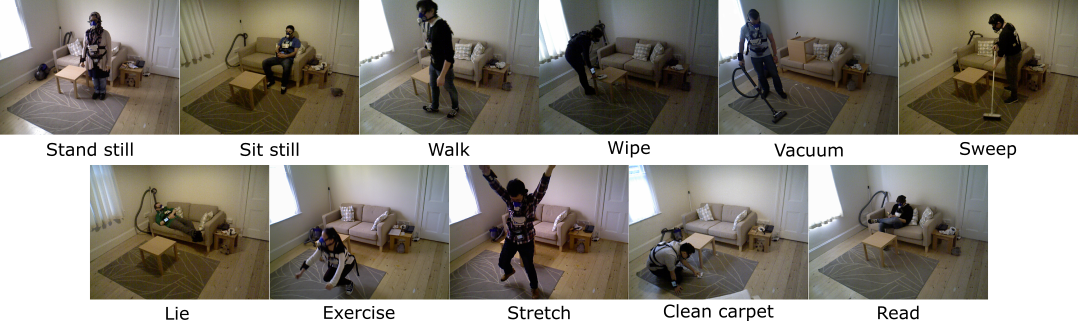

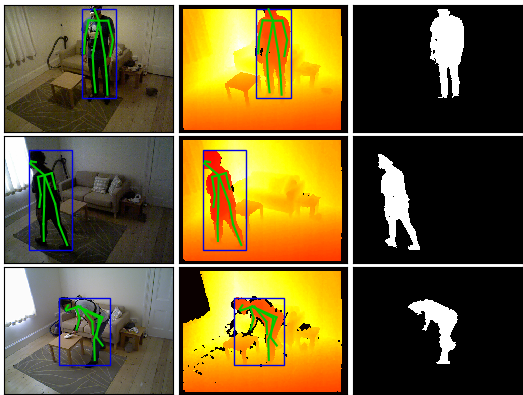

We recorded a number of people with a Kinect camera and accelerometers while performing some typical activities in a house and we then measured the calories burnt using a portable CO2 device.

Using the 3D skeletons provided by the Kinect we transformed the images into silhouettes so that the algorithm can be deployed into privacy-sensitive environments.

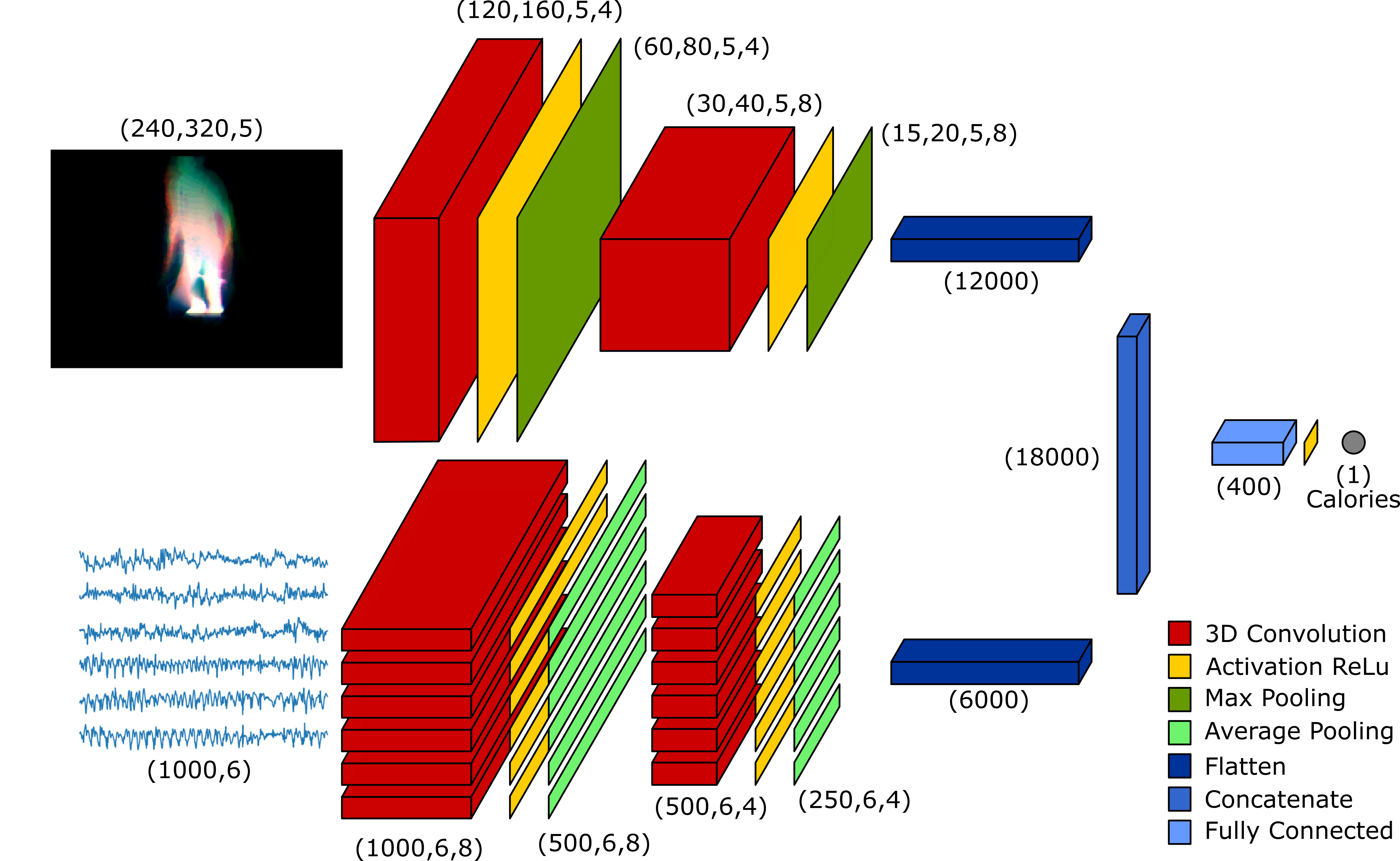

We then trained a Convolutional Neural Network to estimate the calories burnt using silhouettes, acceleration from the wearables and a combination of the two.

The following video shows a comparison between our prediction and the ground truth provided for a validation subject:

The method shows really good agreement with the actual calorie profile and performs better than previously proposed methods found in the literature. To read more about this project, please check out this publication:

CaloriNet: From silhouettes to calorie estimation in private environments

Masullo A., Burghardt T., Damen D., Hannuna S., Ponce-López V. & Mirmehdi M.

September 2018, British Machine Vision Conference.

PhD Research

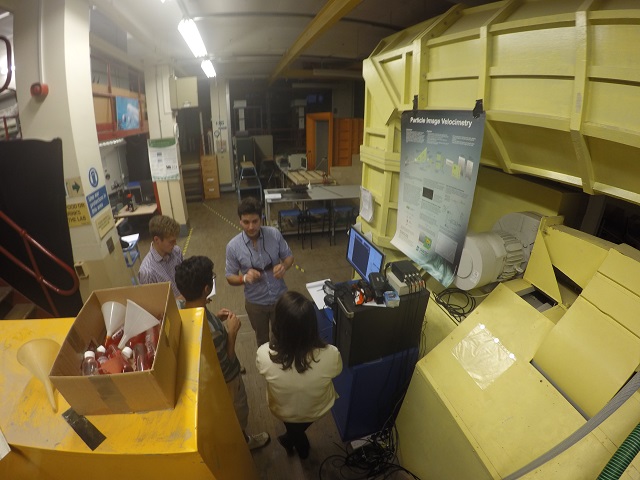

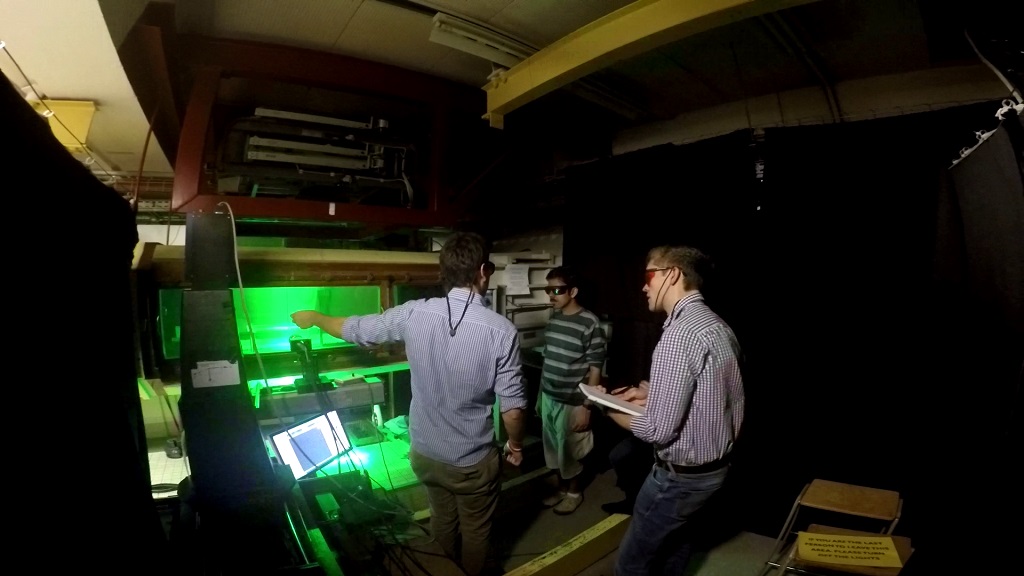

During my PhD I worked on improving a technique called Particle Image Velocimetry (PIV) that is used in Aerospace Engineering to measure the velocity field in wind tunnel experiments. The technique involves spraying very fine oil particles in the wind tunnel and taking pictures of them while they move around aerodynamic objects. The analysis of the movement of the particles reveals the underlying flow velocity (tiny red arrow in the gif).
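For readers unfamiliar with the technique, the textbook core of PIV is a cross-correlation between small windows of two consecutive images: the shift that maximises the correlation is taken as the particle displacement. The sketch below shows only this basic step on toy data, not the improvements developed in my thesis; the names `make_frame` and `displacement`, the window size and the particle positions are made up for illustration.

```python
from typing import List, Sequence, Tuple

Image = List[List[float]]


def make_frame(particles: Sequence[Tuple[int, int]], size: int = 5) -> Image:
    """Build a toy image with unit-intensity particles at (row, col) positions."""
    frame = [[0.0] * size for _ in range(size)]
    for y, x in particles:
        frame[y][x] = 1.0
    return frame


def displacement(frame_a: Image, frame_b: Image, max_shift: int = 2) -> Tuple[int, int]:
    """Return the (dy, dx) shift of frame_b relative to frame_a that
    maximises their direct cross-correlation."""
    rows, cols = len(frame_a), len(frame_a[0])
    best_shift, best_score = (0, 0), float("-inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            score = 0.0
            for y in range(rows):
                for x in range(cols):
                    yb, xb = y + dy, x + dx
                    # Pixels shifted outside the window contribute nothing.
                    if 0 <= yb < rows and 0 <= xb < cols:
                        score += frame_a[y][x] * frame_b[yb][xb]
            if score > best_score:
                best_shift, best_score = (dy, dx), score
    return best_shift


# Two particles that move one pixel down and two pixels right between frames.
frame_a = make_frame([(1, 1), (3, 0)])
frame_b = make_frame([(2, 3), (4, 2)])
shift = displacement(frame_a, frame_b)
```

Dividing the displacement by the time between the two images, and scaling by the physical size of a pixel, gives one velocity vector per window.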

My research was aimed at improving this technique in particularly challenging conditions like reflective surfaces, highly curved objects and strong outliers. My PhD thesis is available here and the publications that resulted from it are available here.

During my PhD, I also had the opportunity to teach several labs, including PIV Lab, Programming, Fluid Dynamics, Compressible Fluid Dynamics, Thermodynamics and Engines.